Assuring a Responsible Future for AI

On 6th November 2024, the Department for Science, Innovation and Technology (DSIT) unveiled its report, Assuring a Responsible Future for AI. This pivotal publication examines the UK’s burgeoning AI assurance market, identifies significant opportunities for growth, and outlines how the government plans to leverage these opportunities to foster a thriving industry.

With projections suggesting the market could exceed £6.53 billion by 2035 if barriers are addressed, the report is a key resource for those invested in the future of artificial intelligence.

Robust AI, Safe for Society

Ethical and robust: at the IST, we are making AI safer for society with our new professional registration scheme.

This accreditation is for everyone who works with AI models, and it has been developed by an interdisciplinary group. You could be an archaeologist, a philosopher or a computer scientist: as long as you work on AI models in any capacity, this accreditation is for you.

Practitioners can register at entry level as a Registered Technician, in mid-career as a Registered Practitioner, or at leadership level as an Advanced Practitioner. Our accreditation verifies that practitioners can use AI robustly and ethically, and can raise and address modelling challenges.

Transforming Lives and Economies

Advances in Artificial Intelligence (AI) are reshaping the way we live, work, and interact. From revolutionising healthcare through improved diagnostics and treatment to supporting environmental conservation, the potential of AI is far-reaching. As a global leader in AI innovation, the UK aims to ensure that this rapidly evolving technology not only scales globally but also operates responsibly and inclusively.

The UK government sees AI as central to its ambitions of driving economic growth, transforming public services, and improving living standards. Harnessing AI responsibly is essential to maximise these benefits while addressing the inherent risks, such as bias, privacy concerns, and socio-economic impacts like job displacement.

AI assurance is a cornerstone of responsible AI adoption. It provides tools and techniques to measure, evaluate, and communicate the trustworthiness of AI systems, ensuring compliance with existing and emerging regulations. This process fosters confidence among consumers, industry stakeholders, and regulators, unlocking broader AI adoption across sectors.

The assurance ecosystem itself represents a lucrative economic opportunity. For example, the UK's mature cyber security assurance sector contributes nearly £4 billion annually to the economy. The government envisions similar success for AI assurance, with significant growth possible if current market challenges are addressed.

Key Findings from the Report

The report is the first to comprehensively assess the state of the UK’s AI assurance market and its future potential. It highlights:

- Market Size and Growth: The UK AI assurance market currently includes an estimated 524 firms generating £1.01 billion annually and employing over 12,500 people. Relative to the size of its economy, the UK's market is larger than other leading markets such as the US, Germany, and France.

- Growth Potential: Projections suggest the market could exceed £6.53 billion by 2035, provided barriers to growth are addressed.

- Challenges: Limited demand, lack of quality infrastructure, and fragmented frameworks and terminology are inhibiting growth. Addressing these will be key to unlocking the market’s full potential.
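To put the projection in perspective, the two headline figures can be turned into an implied annual growth rate. The sketch below is illustrative only: it assumes the £1.01 billion estimate is a 2024 figure and that the market grows at a constant compound rate to 2035. Neither assumption is stated in the report, whose own modelling may differ, and the function name `implied_cagr` is hypothetical.

```python
# Implied compound annual growth rate (CAGR) between two market-size estimates.
# Assumptions (not from the report): the £1.01bn figure is for 2024, and
# growth to 2035 follows a constant annual rate.

def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual rate that grows start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

current_bn = 1.01    # £bn, current annual market size (from the report)
projected_bn = 6.53  # £bn, projected size by 2035 if barriers are addressed
years = 2035 - 2024  # assumed 11-year horizon

rate = implied_cagr(current_bn, projected_bn, years)
print(f"Implied annual growth: {rate:.1%}")  # prints "Implied annual growth: 18.5%"
```

Under these assumptions, the market would need to grow by roughly 18.5% a year, which gives a rough sense of how ambitious the projection is.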

Government Action Plan

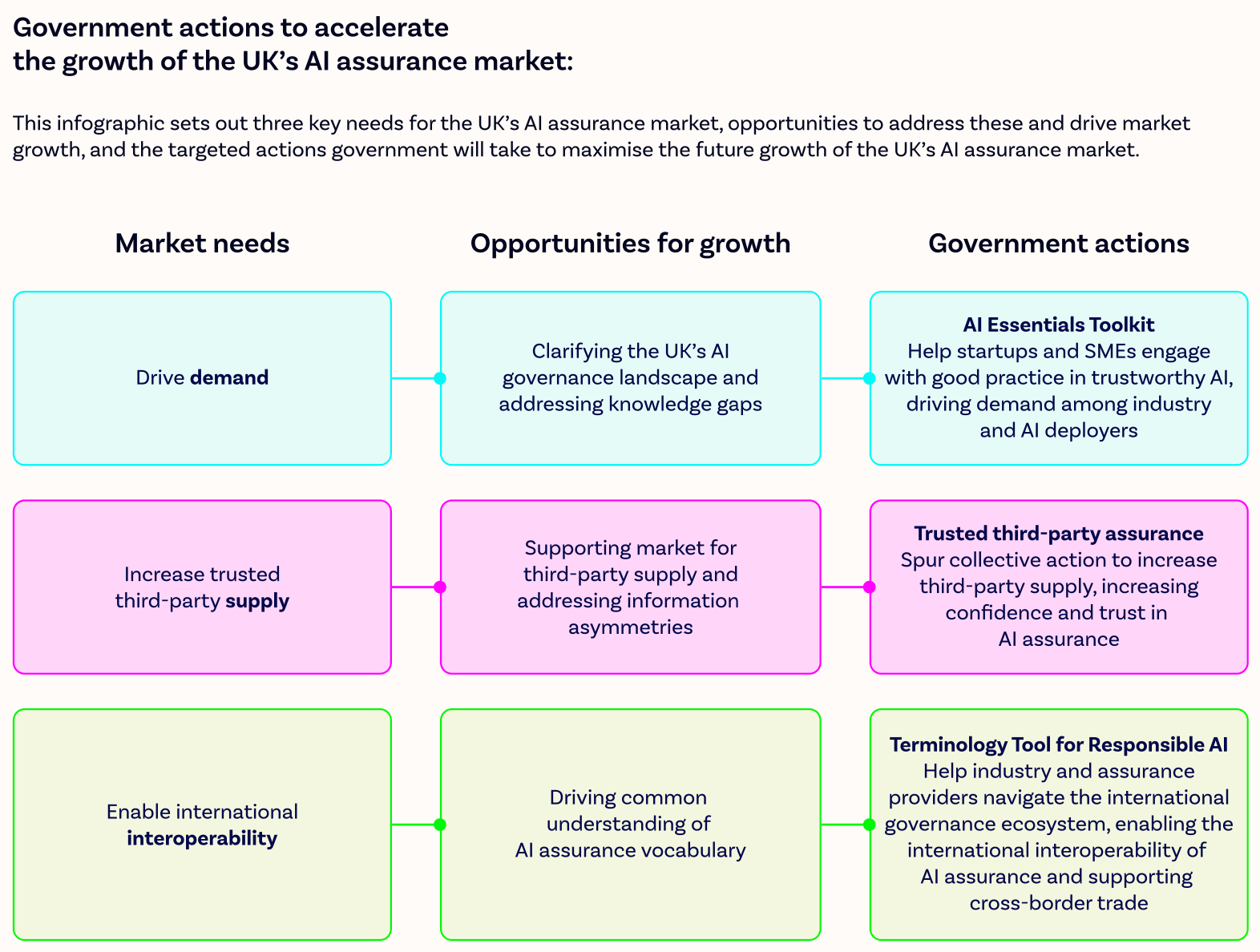

The DSIT has outlined targeted measures to support the AI assurance market and ensure responsible AI adoption:

- Boosting Demand: The creation of an AI Assurance Platform will act as a one-stop resource for information and tools, including an AI Essentials Toolkit for startups and SMEs. This initiative aims to increase awareness and demand for AI assurance services across sectors.

- Increasing Supply: Collaborations with industry stakeholders and the AI Safety Institute will focus on developing high-quality third-party assurance tools. A “Roadmap to Trusted Third-Party AI Assurance” will guide this effort, spurring innovation and investment.

- Enhancing Interoperability: A Terminology Tool for Responsible AI will harmonise industry and regulatory language, supporting the global scalability of the UK’s AI assurance sector.

A Responsible Future for AI

AI is poised to transform the UK economy and society, with its economic value projected to exceed $1 trillion by 2035. However, realising this potential requires a proactive approach to ensure safe and equitable development and deployment. By investing in AI assurance, the UK can build a foundation of trust, enabling responsible AI innovation to flourish both domestically and globally.

The DSIT’s Assuring a Responsible Future for AI report marks a significant step forward in this journey, providing a roadmap to unlock the immense opportunities that lie ahead. With targeted action and collaboration across sectors, the UK is well-positioned to lead the way in creating a future where AI benefits are widely shared and responsibly harnessed.